Mark Manson

I, For One, Welcome Our A.I. Overlords

A few weeks ago, for the first time ever, a computer beat the world champion of Go, one of the most complex games known to man. This was another watershed moment in the progress of artificial intelligence.

To give you an idea of how complex Go is, there are 2.082 × 10^170 legal board positions. That’s roughly a 2 with 170 zeroes after it. Chances are your brain cannot even conceive of a number that large (but a computer can). For comparison, there are only about 10^80 atoms in the observable universe, which is a 1 followed by 80 zeroes.
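If you want to sanity-check those numbers yourself, Python’s arbitrary-precision integers make it easy. This is just a quick illustrative sketch: the two figures are the ones quoted above, taken as given, and the atom count is only an order-of-magnitude estimate.

```python
# Quick scale check on the two numbers quoted above (taken as given, not derived).
# Python integers have arbitrary precision, so these values are exact.
go_positions = 2082 * 10**167   # 2.082 x 10^170 legal Go board positions
atoms_in_universe = 10**80      # rough estimate of atoms in the observable universe

# Number of digits when each value is written out in full.
go_digits = len(str(go_positions))
atom_digits = len(str(atoms_in_universe))

# How many times larger the Go figure is than the atom count.
ratio = go_positions // atoms_in_universe

print(f"Go positions: {go_digits} digits")
print(f"Atoms in the universe: {atom_digits} digits")
print(f"Go positions per atom: about 2 x 10^{len(str(ratio)) - 1}")
```

Even the ratio between the two numbers, roughly 2 × 10^90, is vastly larger than the number of atoms in the universe.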

The reason this is such a big deal is that Go is so complicated that, in order to beat a top human player, a machine can’t simply calculate every possible outcome. It has to learn how to think creatively, improvising and adapting to the situation at hand. In other words, there has to be some serious artificial intelligence going on: real, creative intelligence.

In case you haven’t gotten the memo on the whole “AI is going to take over the world” thing, here are the few facts you need to know.

- Computers are getting smarter.

- Computers are getting smarter at an accelerating rate. Advancements that used to take 10 years now take one year, and advancements that used to take one year now take weeks or even days.

- It is highly likely that within our lifetimes, there will be computers that are far smarter and more capable than any single human being.

- These smarter computers will then likely be able to design and improve upon technology (i.e., themselves) and create new technology that we cannot even begin to fathom.

People who understand the above points generally have one of two reactions:

- They think we’re totally fucked. The computers are going to take over everything and kill/enslave us all.

- They think this is going to bring about a technological utopia that will fix all of our silly human squabbles, and we can all live happily ever after having orgies in our ultra-VR world that exists in the cloud.

As with most things, the truth is likely somewhere in the middle.

But even if shit does hit the fan, even if the robots see us as the lice ruining this planet’s pristine scalp and want to round us all up and throw us into an active volcano, even if we are inadvertently inventing the very mechanisms of our own extinction…

…I don’t care. It doesn’t matter. It doesn’t bother me. And it shouldn’t bother you, either. I will explain why in a bit. But for now, you should know that I, for one, welcome our new robot overlords.

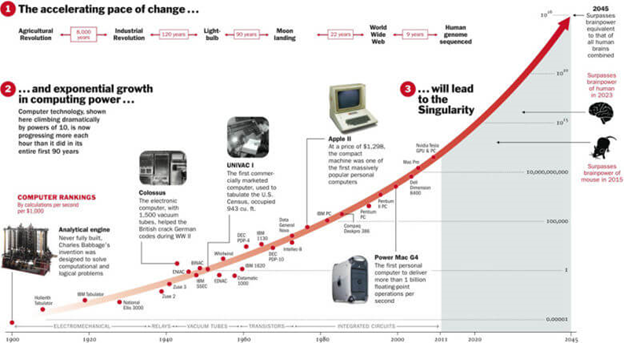

ACCELERATING GROWTH IN THE TECH INDUSTRY

Technological developments compound upon themselves, causing the rate of development itself to accelerate. In other words, the more advanced the technology we create, the easier it becomes to create even more advanced technology. As a result, when we look at the advancement of computing technology, we see an exponential curve: the more time goes by, the faster things develop.

Image source: Time Magazine

Computing power has doubled roughly every 18 months for 50 years now. In terms of raw computing power, computers now rival mouse brains, whereas only a few years ago they couldn’t even compete with an insect brain.
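Doubling compounds much faster than intuition suggests. Here is a back-of-the-envelope sketch that takes the 18-month doubling period and the 50-year span above at face value; the real-world rate has varied over the decades, so treat the result as illustrative only.

```python
# Back-of-the-envelope compounding, assuming a steady doubling every 18 months.
years = 50
doubling_period_years = 1.5  # 18 months

doublings = years / doubling_period_years   # about 33.3 doublings
growth_factor = 2 ** doublings              # total multiplication in computing power

print(f"{doublings:.1f} doublings -> roughly {growth_factor:.2e}x more computing power")
```

That works out to about 33 doublings, or roughly a ten-billion-fold increase.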

To give you a more immediate example of how rapidly technology has advanced, more pictures are now taken every 2 minutes than were taken in the entire 19th century.1 About 10% of the 3.5 trillion photos ever taken were taken in the past 12 months.


If we are indeed on an exponential curve when it comes to high-tech advances, then people like Jeremy Howard are probably right when they say we’re only a few years away from machine intelligence that rivals, if not surpasses, our own in many domains previously thought to be uniquely human.

And to be sure, AI is creeping into more and more domains of our lives.

Only a decade ago, people were laughing at the poor performance of self-driving cars. Today, self-driving cars can not only finish a closed-road course, they can drive on busy freeways right alongside cars driven by humans.2

And when computers aren’t beating world champion Go players, they’re busy doing things like writing articles about sports and breaking news events, writing descriptions of images they’ve never “seen” before, and diagnosing cancer. For many of these tasks, computers are as good as, if not better than, humans, and at the ones where they aren’t, they’re learning to do them better and better every day without the help of humans.

A few short years ago, facial recognition software was incredibly expensive and not that good at identifying people in real-world settings. It was considered super-advanced spy-level technology and really only used by a few world governments.

Now Facebook can fucking tag your friends from last weekend’s barbecue.3

Here’s the thing about accelerating growth in computers: there will one day come a point where we build a computer that is smarter than any human on Earth. On that day, computers will usurp us as the primary problem solvers on the planet, and from there, our thoughts, decisions, and actions will slowly become obsolete. The machines will be better than us at everything, so more and more, we will do nothing useful.

This terrifies some people. They envision some sort of future like Terminator or The Matrix where machines enslave us or exterminate us.

Other people look forward to the rise of the robots with a sort of cultish fervor because they believe the machines’ problem-solving capabilities will outstrip ours so much that life will become unimaginably pleasant and problem-free. All diseases will be cured. Poverty, world hunger, war, and climate change will all be solved. We’ll have an infinite amount of leisure time, and in extreme cases, some people believe the machines will make us immortal.

THE TWO POSSIBLE OUTCOMES

Since we’re always being told to be fucking positive, let’s start with the techno-utopiasts.

There are people like Ray Kurzweil who think that technology will not only improve our lives, it will save humanity and possibly guarantee our place in the universe indefinitely. Kurzweil believes in future technology like nanobots that will repair our cells and reverse aging or remove excess fat and sugar so we can eat whatever we want. And just in case our physical bodies aren’t able to live forever, Kurzweil thinks we’ll have the ability to upload our brains into the cloud and “live” in a virtual world forever, long after our physical bodies are gone.

Others in this camp think that an artificial superintelligence would be able to answer questions that are too complex for humans to even understand, and that we’ll be exponentially better off for it. Also, not only would the machines invent better gadgets and widgets, they would invent exponentially more efficient ways of building gadgets and widgets, making it possible for virtually everyone on the planet to reap the benefits.

A few lines of reasoning support this idea. First, even though technology has created some new problems for humanity, like nuclear weapons and YouTube celebrities, it has, so far, clearly been a net benefit for humanity. Despite what politicians and pundits want you to believe, the average person on Earth is better off than they were just a few short years ago and it’s largely due to technology becoming better, cheaper, and more widespread. If this trend continues, we may have nothing to worry about.

Second, Kurzweil and his supporters believe that technology will have no reason to harm humanity because not only is it created by us, it is becoming more a part of us. They believe we will even reach a point where our biology and our technology are indistinguishable. If this is the case, the argument goes, any form of technology that is detrimental for humans is also detrimental to itself, and a self-destructive technology of any kind cannot persist. That is, it would quickly “die off” just as a detrimental gene mutation is quickly weeded out of the gene pool.

But techno-utopiasts are likely biased in that they don’t acknowledge that all technologies can be used for various purposes, both beneficial and destructive. They also tend to ignore the fact that humans are slow to adapt to new technologies and that there are always groups of people who will seek to abuse those technologies for their own selfish ends.

In the other camp, you have the techno-armageddonists. I totally just made that word up, but apparently it exists, because spell-check told me so.


What the techno-armageddonists lack in conviction (most of them just aren’t sure what to think yet), they make up in celebrity star power.4 Bill Gates, Stephen Hawking, and Elon Musk are just a few of the leading thinkers and scientists who are crapping their pants at how rapidly AI is developing and how under-prepared we are as a species for its repercussions. When Musk was asked what the most imminent threats to humanity were, he quickly said there were three: first, wide-scale nuclear war; second, climate change. Before naming a third, he went silent. When the interviewer asked, “What is the third?” he smiled and said, “Let’s just say that I hope that the computers will decide to be nice to us.”

Hope we’re not just the biological boot loader for digital superintelligence. Unfortunately, that is increasingly probable

— Elon Musk (@elonmusk) August 3, 2014

Possibly the most outspoken and respected of the techno-armageddonists is the Swedish philosopher Nick Bostrom. One thing Bostrom and others fear is runaway self-improving technology; that is, a machine that is smart enough to make itself (or new versions of itself) smarter without human intervention. If it reaches a point where it surpasses human intelligence, it’s only a very short matter of time before the law of accelerating returns kicks into overdrive and the exponential curve shoots straight up and we won’t be able to stop it. Bostrom makes a good point here: creating something that is smarter than you could be an evolutionary disaster for your species.

We run a very real risk of not being able to control an entity that is orders of magnitude more intelligent than us. Perhaps if computers get smart enough, they’ll figure out a way to domesticate us, a lot like humans domesticated horses to do labor like pulling plows and buggies and war chariots (or whatever the hell horses did back then). The scary part is that this might be the best scenario for us (doing work for machines that they can’t do or don’t want to do), because just as humans created new technology to replace the horse, a superintelligent, self-improving machine would eventually come up with new technology to replace us. And, well, let’s just say the horse population isn’t what it used to be.

Some argue that this isn’t plausible because humans build technology with safety in mind. But when was the last time a major technological breakthrough was NOT used by somebody for nefarious or destructive purposes? Oh yeah, that’s right. Never.

WHY I DON’T CARE WHAT HAPPENS AND NEITHER SHOULD YOU

So let’s assume that super-intelligent machines are created and do render humanity powerless. Let’s assume they’re not somehow integrated into us and our brains, and let’s assume that people like Hawking and Musk are right: that humanity really is just a multi-millennium boot drive to digital hyper-intelligence and we have outlived our usefulness.

I’m still not worried about it.

Why? Well, to keep the bullet point train rolling, let’s take this point-by-point:

- The machines’ understanding of good/evil will likely surpass our own. When was the last time a dog or dolphin committed genocide? When was the last time a computer decided to vaporize entire cities in the name of abstract concepts such as ‘freedom’ and ‘world peace’?

That’s right, the answer is never.

My point here is not that intelligent machines won’t want to exterminate the entire human species. My point is that as humans, we’re throwing rocks from inside a glass house here. What the fuck do we know about ethics and humane treatment of animals, the environment, and each other? What leg do we have to stand on?

That’s right: pretty much nothing. When it comes to moral questions, humanity historically flunks the test. Super-intelligent machines will likely come to understand ethics, life/death, creation/destruction on a much higher level than we ever could on our own. And this idea that they will exterminate us for the simple fact that we aren’t as productive as we used to be, or that sometimes we can be a nuisance, I think, is just projecting the worst aspects of our own psychology onto something we don’t know and can’t understand.

Right now, most of human morality is based around an obsessive preservation and promotion of each of our individual human consciousnesses. What if advanced technology renders individual human consciousness arbitrary? What if consciousness can be replicated, expanded, and contracted at will? That would completely obliterate any ethical understanding we could ever have. What if removing all of these clunky, inefficient biological prisons we call bodies is actually a far more ethical decision than letting us continue to squirm and squirt our way through 80-some-odd years of existence? What if the machines realize we’d be much happier being freed from our intellectual prisons and having our conscious perception of our own identities expanded to include all of perceivable reality? What if they think we’re just a bunch of drooling idiots and keep us occupied with incredibly good pizza and video games until we all die off from our own mortality? Who are we to know? And who are we to say?

But I will say this: they will be far better informed than we ever have been.

- Even if they do decide to kill or enslave us, they will surely be practical about it. Humans tend to cause the most trouble when we’re not happy. When we’re not happy, that’s when we get all moody and whiny and angry and violent. That’s when we start political uprisings and religious cults and bombing remote countries and demanding our rights be respected goddamnit! and start killing indiscriminately until somebody pays attention to us like mommy never did.

If the machines try to do us in like Skynet in The Terminator, then we’re going to have a global civil war on our hands, and that’s no good for anybody, especially the machines. Civil wars are inefficient. And machines are programmed for efficiency.

When humans are happy, we don’t have time for such things — we’re too busy giggling and fucking to care. Therefore, a far more practical way to get rid of us would be for the machines to manipulate us into gleefully getting rid of ourselves. It’ll be like Jim Jones on a global scale. Whatever they cook up for us will appear to be the best goddamn idea we’ve ever heard of — none of us will be able to resist it and we’ll all euphorically agree to their plan — and then boom, it’ll be over. Quick and painless. It’ll be the best-tasting cyanide-laced Kool-Aid ever conceived. And we’ll all be in line happily gulping it down.

Now, if you think about it, this isn’t such a bad way to go. Beats getting bombed by drones or being vaporized in a nuclear blast.

As for enslavement, same thing goes. A deliriously happy slave never rebels. I imagine a sort of Matrix-y type deal where we’re kept in a constant hallucinogenic state where it’s Mardi Gras on MDMA pretty much 24/7/365. Can’t be that bad, can it?

- We don’t have to fear what we don’t understand. A lot of times parents will raise a kid who is far more intelligent, educated, and successful than they are. Parents then react in one of two ways to this child: either they become intimidated by her, insecure, and desperate to control her for fear of losing her, or they sit back and appreciate and love that they created something so great that even they can’t totally comprehend what their child has become.

Those who try to control their child through fear and manipulation are shitty parents. I think most people would agree on that.

And right now, with the imminent emergence of machines that are going to put you, me, and everyone we know out of work, we are acting like the shitty parents. As a species, we are on the verge of birthing the most prodigiously advanced and intelligent child within our known universe. It will go on to do things that we cannot comprehend or understand. It may remain loving and loyal to us. It may bring us along and integrate us into its adventures. Or it may decide that we were shitty parents and stop calling us back.

Whatever happens, that shouldn’t change how we feel about this moment. It’s bigger than us. Who cares if we are one big, long evolutionary boot disk for something greater than we can comprehend? That’s great! It means we had one job. And we came and fucking did it. Be happy you were part of the generation that saw it get done. And now tearfully wave goodbye as our kid gets ready to move out of the house and start a life so amazing that it exists beyond the horizon of our comprehension.

This article was originally published on MarkManson.net.

If you liked the featured image in this post, you can support the artist of The Robot Uprising Series here.