Jordan Bates • 4 min read

Sam Harris’ TED Talk on Artificial Intelligence Will Show You Why We Might Be Building Our Own Exterminator

“I’m going to describe how the gains we make in artificial intelligence could ultimately destroy us. And in fact I think it’s very difficult to see how they won’t destroy us.”

— Sam Harris

It’s fairly likely that you read the title of this post and thought, “Artificial intelligence? What’s this nonsense?”

I don’t fault anyone for having such a reaction. When I first began to encounter a number of highly intelligent people discussing the risks surrounding superintelligent AI, I was baffled and skeptical. This seems like some sci-fi foolery, I thought.

However, I continued to read the arguments of those who are worried about AI, and I continued to discover more brilliant people—everyone from Oxford philosopher Nick Bostrom to visionary entrepreneur Elon Musk—who are vocally concerned about AI.

After a substantial amount of research, I concluded that there are, in fact, a number of potentially disastrous scenarios that may arise if we develop artificial intelligence that surpasses the cognitive abilities of human beings.

About three weeks ago, TED released a new talk by neuroscientist Sam Harris (whose podcast I recommend), in which Harris provides an engaging 15-minute introduction to the topic of superintelligent AI. He argues that given a long enough timescale (and assuming humanity doesn’t destroy itself first), we are extremely likely to develop a superintelligent AI. He then elaborates on several of the arguments for why this could be a catastrophic development if we aren’t sufficiently cautious and clever in designing the AI.

This topic is potentially one of paramount importance, as it has implications for the future of all life on Earth, and this talk is the best short introduction to the topic I’ve found. I highly encourage anyone with even a remote interest to take 15 minutes and watch the talk now:

More on Superintelligent AI

If you found that talk as fascinating as I did, you may want to delve deeper into the topic. If so, I can’t recommend Wait But Why’s two-part essay series on artificial intelligence (Part 1 | Part 2) highly enough. It’s an extremely accessible, in-depth exploration of the topic.

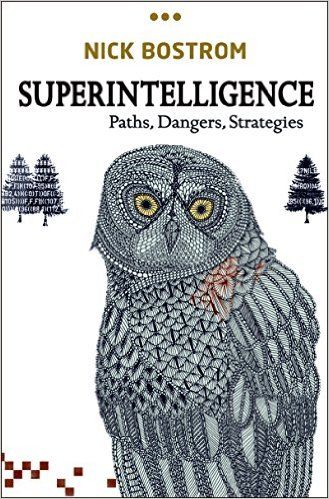

You may also want to take a look at Wikipedia’s page on superintelligence. And if you end up wanting to go deeper still, Nick Bostrom’s book, Superintelligence: Paths, Dangers, Strategies, is widely considered to be one of the foundational texts on the risks posed by advanced AI.

Existential Risk

The risks surrounding superintelligent AI belong to a large category of risks known as “existential risks.”

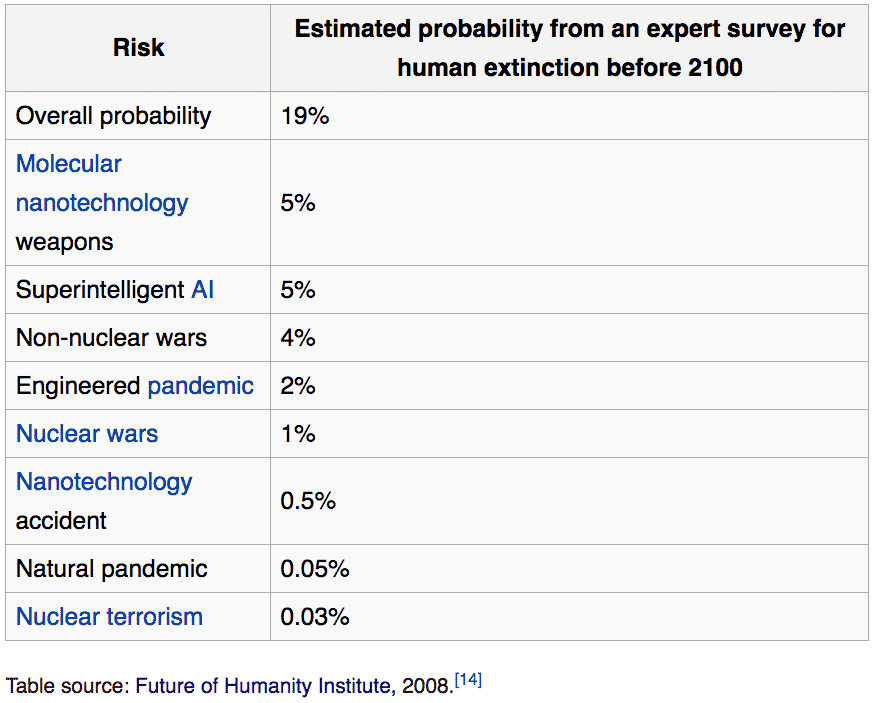

Although the world we occupy today is by many measures better than it’s been at any other time in human history, the risks we now face as a species are greater than ever. There are currently far more global catastrophic risks, including the most severe kind, which Nick Bostrom calls “existential risks,” than at any other point in history. In 2008, an informal survey of experts at the Global Catastrophic Risk Conference at Oxford produced a median estimate of a 19% chance of human extinction before 2100.

These existential risks have led Elon Musk and others to believe that we must attempt to colonize space and transform humanity into a multi-planetary species. If our species were to erect a self-sustaining civilization on another planet, the chances of our extinction would be greatly reduced.

It is my sincerest hope that we grasp the enormity of these existential risks, address and avert them, and preserve intelligent earthly life into the deep future. I believe existential risk is the largest issue humanity faces now and for the foreseeable future. Thankfully, organizations such as the Future of Life Institute, the Future of Humanity Institute, the Centre for the Study of Existential Risk, and the Global Catastrophic Risk Institute are researching existential risks and the most effective means of addressing and averting them. In my opinion, the average person can help mitigate existential risk by raising awareness (share articles like this one) and by living sustainably, minimalistically, compassionately, cooperatively, and non-dogmatically.

More on Existential Risk

If you’d like to learn and think more about existential risk, I recommend the following:

— Existential Risks: Analyzing Human Extinction Scenarios and Related Hazards by Nick Bostrom

— This list I compiled on Twitter of the best sources of information on existential risk

— The Wikipedia page on global catastrophic risk

— Vinay Gupta’s original two-part interview with the Future Thinkers Podcast (Part 1 | Part 2)

— Wait But Why’s two-part introduction to the prospect of superintelligent AI (Part 1 | Part 2)

— My essay on why humanity must become a multi-planetary species

— 80,000 Hours

— The Global Priorities project

— Sam Harris’ interview with Will MacAskill, one of the founders of effective altruism

If you’d like to learn to live minimalistically, compassionately, and non-dogmatically, check out our new course.

Jordan Bates

Jordan Bates is a lover of God, father, leadership coach, heart healer, writer, artist, and long-time co-creator of HighExistence. — www.jordanbates.life