Jordan Bates • 15 min read

Why Politics and Religion Make Everyone Crazy and What You Can Do About It

Politics is a notoriously inflammatory topic of conversation. It’s often impossible to have a calm, rational discussion of political issues.

Why is this?

In short: the majority of people base their politics on a tribal identity.

They choose a political tribe—Red team, Blue team, whatever—and their membership in this tribe becomes an important part of their identity. They then have little choice but to blindly support every position or policy idea their tribe supports, lest they be excommunicated from a tribe that is essential to their identity.

Tribalism, or “a way of thinking or behaving in which people are (excessively) loyal to their own tribe or social group,” is extremely prevalent in human social organization. In moderation, tribalism is normal and healthy. In excess, it can be catastrophic.

Tribalism: A Good Thing, Until it’s Not

As humans, we have a deep need to be part of some kind of tribe or community. From an evolutionary standpoint, the reasons for this are straightforward: we evolved in relatively small, tight-knit tribes and were utterly dependent on them for our survival. For most of human history, supporting and defending one’s tribe was quite literally a matter of life or death.

Nowadays, we don’t so much need tribes for our survival, but we do need them for our psychological well-being. We form tribes based on all kinds of things, and this is often a beautiful arrangement.

In an essay on tribalism, rationalist blogger Scott Alexander identified a few of the obvious benefits of membership in tribes:

“Tribalism intensifies all positive and prosocial feelings within the tribe. It increases trust within the tribe and allows otherwise-impossible forms of cooperation… It gives people a support network they can rely on when their luck is bad and they need help. It lets you ‘be yourself’ without worrying that this will be incomprehensible or offensive to somebody who thinks totally differently from you. It creates an instant densely-connected social network of people who mostly get along with one another. It makes people feel like part of something larger than themselves, which makes them happy and can (provably) improve their physical and mental health.”

Tribes, or communities, are often wonderful. I love the HighExistence community and other communities of which I am a part.

But tribalism also has a dark side, which Scott Alexander briefly acknowledges in the same essay:

“The dangers of tribalism are obvious; for example, fascism is based around dialing a country’s tribalism up to eleven, and it ends poorly. If I had written this essay five years ago, it would be titled ‘Why Tribalism Is Stupid And Needs To Be Destroyed’.”

When tribalism gets out of hand, things can get really ugly really fast. Tribalism arguably underlies many of the most atrocious wars, genocides, mass murders, and violent conflicts in human history. Harvard professor David Ropeik elaborates on this point in a poignant essay on tribalism published on Big Think:

“Tribalism is pervasive, and it controls a lot of our behavior, readily overriding reason. Think of the inhuman things we do in the name of tribal unity. Wars are essentially, and often quite specifically, tribalism. Genocides are tribalism — wipe out the other group to keep our group safe — taken to madness. Racism that lets us feel that our tribe is better than theirs, parents who end contact with their own children when they dare marry someone of a different faith or color, denial of evolution or climate change or other basic scientific truths when they challenge tribal beliefs. What stunning evidence of the power of tribalism!”

When people become supernormally invested in a particular tribe, they become capable of committing all sorts of hideous acts in the name of the tribe which they otherwise would not have committed. They cling so tightly to the beliefs of their tribe that they become willing to kill those who have alternate beliefs. An exceptionally high degree of tribalism is what makes ISIS possible: Existentially disoriented individuals are attracted to the tribe because it seems to offer Absolute Truth, an answer for everything. Once they’ve joined, total loyalty to the tribe is demanded, and the tribe’s “Truth” can never be called into question.

This is why tribalism is dangerous. Thankfully, most tribes are nowhere near as tribalistic as ISIS. One must remain vigilant, though. Few people foresaw the rise of Nazi Germany, but all it took to turn most of the German population into utterly tribalistic death-bringers was a hyper-charismatic leader promising that he had Absolute Political Truth.

You may have picked up on the pattern here. Dangerous, deadly degrees of tribalism frequently arise in groups that are based around some sort of “Absolute Truth,” some fundamental ideology.[1] People tend to be very attracted to the idea of an Absolute Truth because if they find one, it can answer all of their questions and give them the sense that their lives have some sort of grand meaning. It’s easy to see, then, why people in tribes based on a supposed “Absolute Truth,” on an ideology, often become extremely hostile when their ideas are challenged. This is because it isn’t merely their ideas that are being called into question. It’s their tribal ideology, their “Absolute Truth”—the fundamental structure that makes their lives seem meaningful, the basis of their sense of security, stability, and certainty.

The American philosopher Terence McKenna, recognizing this connection between ideology and hostility/violence, was a particularly vocal critic of all forms of dogma and ideology. He once said, “Ideology always paves the way toward atrocity,” and on another occasion, he elaborated on the absurdity of believing one has found an Absolute Truth:

“Ideology kills. It is not a childish game. Ideology kills. I was very amused—I mean, the Heaven’s Gate [mass cult suicide] was a tragedy, but there were these curious aspects to it… I mean, it was a week before Easter. All around me, I heard people saying things like, ‘How could they believe such crazy stuff? And by the way, honey, did you get a dress for service so we can go and celebrate the resurrection of the redeemer?’

You just wonder whose ox is getting gored here? Because something is absolutely nuts—but 400 million people believe it—that makes it okay? But if 20 people believe something… that’s a cult? … I have an absolute horror of belief systems and cults… Belief is hideous. It’s also completely unnecessary. Imagine a monkey walking around with the idea that he or she possesses certain truth. I mean, you have to lack a sense of humor to not see the absurdity of that… We understand what we can understand. We build models. To do this without realizing the tentative nature of the enterprise is just damn foolishness…

That’s why I say: The thing to keep coming back to is the felt presence of immediate experience. Not how do I feel as a feminist or a Marxist or a devotee of Hieronymus Bosch… How do I feel? Period. Not through the filter of ideology. As I look back through history… very few ideologies last very long without going sour or becoming toxic. So why should we assume, as all those naive societies in the past assumed, that we have 95% of the truth and the other 5% will be delivered by our best people in the next 3 years? Fools have always believed that…”

Ideologies are fragile because they can’t possibly deliver on their promise to contain all the answers. Thus, tribalism mixed with ideology is a dangerous cocktail. Members of an ideology-based tribe can’t openly doubt their tribe’s ideology, yet they are constantly bombarded (especially in the Internet age) with information and evidence suggesting the incompleteness of their tribe’s “Truth.” This results in immense cognitive dissonance.

Different tribes/people have different methods of dealing with this cognitive dissonance. Some just get really angry and shout at anyone who disagrees with them. Others, like ISIS, decide that everyone who disagrees with them is a blasphemous sodomite who must be annihilated in the name of Allah.

In any case, this dark cocktail of tribalism and ideology is the reason why politics and religion “make everyone crazy.” Luckily, as I’ve said, most tribes are not so extreme or misguided as to inspire the majority of their members to commit atrocities. Again, though, one must remain vigilant. Tribalism in American politics seems to have ramped up to an unsettling degree in recent years. In fact, politically motivated tribalistic violence has occurred with some regularity over the past few months.

How Tribalism Destroys (Political) Discourse

We should note that extreme tribalism need not become violent in order to be deeply destructive and counterproductive. Perhaps the best example is the one with which I opened this essay: the way in which tribalism destroys political discourse.

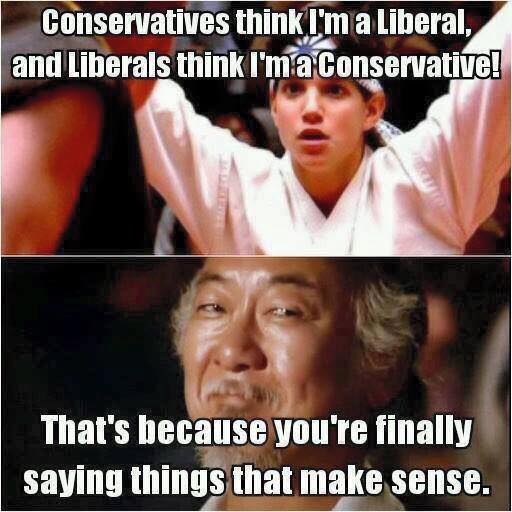

It’s quite easy to tell when a person is deeply tribalistic in their approach to politics. If you find that you’re able to predict their viewpoints on issues such as abortion and climate change, simply because you know their views on some unrelated issue such as gun control, you’re likely dealing with a political tribesman.

Such individuals are often belligerently self-righteous in defending the Moral Rightness of their tribe’s every position, as well as fiercely belittling of the stances of an opposing tribe. Attempting to disagree with such a person is likely to result in immediate defensiveness, derping, and ad hominem attacks, preventing any rational, cooperative discourse from occurring.

(Note: This behavior is not exclusive to those in major political parties. Ideologues of all sorts—anarchists, atheists, SJWs, environmentalists, conspiracy theorists, vegetarians, Mormons, etc. etc. etc.—can become excessively tribalistic to the point where real discourse becomes impossible.)

It’s easy to see how such extreme forms of tribalism make it impossible for productive political thought/dialogue to occur. If people are simply parroting their tribe’s viewpoints, they aren’t researching/analyzing the issues for themselves. And if neither side can even consider the possibility that the other side might have something valuable to say, mutual understanding and compromise become impossible. This results in political systems in which little to nothing gets done and everyone distrusts the hell out of each other. Politics becomes an outmoded and inefficient means of adapting our societies to a rapidly changing world, forcing people to find ways to circumvent politics entirely.

For some things, though—e.g. implementing environmental regulations on large companies—we need a functioning political system. And thus we must attempt to erode the extreme tribalism that has come to define so much of modern political discourse.

Solutions to Extreme Tribalism

Excessive tribalism, as I’ve hopefully established, is often a destructive force. And in its most extreme forms, it’s profoundly dangerous. Here are a few ideas for counteracting and eroding extreme tribalism:

Form/Join Communities Based on Epistemic Virtue

Scott Alexander has said that he thinks one solution or safeguard to the problem of extreme tribalism is to form tribes or communities based on “epistemic virtue.” By epistemic virtue, he means openness to many ideas and viewpoints, indefatigable curiosity, a deep lust for the truth, the ability to change views based on evidence, the ability to identify biases and employ rationality, and a commitment to “utter honesty,” as Richard Feynman put it. “Epistemic virtue” is fundamentally non-dogmatic; this is key. In a memorable passage in one of his essays, Alexander elaborates on “epistemic kindness,” one aspect of epistemic virtue:

“I don’t know how to fix the system, but I am pretty sure that one of the ingredients is kindness.

I think of kindness not only as the moral virtue of volunteering at a soup kitchen or even of living your life to help as many other people as possible, but also as an epistemic virtue. Epistemic kindness is kind of like humility. Kindness to ideas you disagree with. Kindness to positions you want to dismiss as crazy and dismiss with insults and mockery. Kindness that breaks you out of your own arrogance, makes you realize the truth is more important than your own glorification, especially when there’s a lot at stake.”

Any tribe truly based on epistemic virtue would possess a critical self-awareness and an awareness of the dark side of tribalism that would prevent dangerous forms of extremism from arising. Really, any tribe that allows/encourages its members to have many differing perspectives is seriously unlikely ever to have problems with extremism. Unfortunately, of course, most of the tribes in the world are not based on epistemic virtue, and many of them demand that their members hold certain beliefs. The largest tribes are based on racial or national identity, and/or on some kind of fixed, inflexible ideology—e.g. Christianity, Islam, right-wing ideology, left-wing ideology, etc. As I said, this has historically resulted in an inconceivable amount of violence.

But there is hope: forming or joining tribes based on epistemic virtue and openness to ideas (anti-dogmatic, anti-ideological tribes such as LessWrong, HighExistence, Refine The Mind, etc.) is one effective method of counteracting extreme tribalism.

Subtly Undermine Excessive Certainty

Another means of undermining extreme tribalism is to plant seeds of doubt in the minds of those who are convinced of the correctness of their particular tribal ideology.

The way to do this is not to overtly argue with people or take on a patronizing, all-knowing attitude. Such tactics will provoke defensiveness in the other person and likely cause them to cling more tightly to their precious beliefs.

In general, you’ll have more luck planting seeds of doubt by listening, being respectful/compassionate, and asking sincere questions: “Oh, interesting, can you explain in more detail how that would work?” or “Oh, I see where you’re coming from. What do you think of the idea that X and Y?”

If you can ask the right questions without seeming like a know-it-all, you have a chance of prompting people to examine their own viewpoints. Don’t focus on changing someone’s entire worldview on the spot; that rarely happens. Simply try to plant a seed of doubt that will gradually sprout into a full-fledged worldview-examination at a later time.

(Note: There’s something to be said here for choosing your battles. For instance, no one in the world is likely to benefit from you trying to convince your dear old Christian grandmother that her views are misguided. Such an attempt may, however, cause undue stress and ruin your relationship.)

This is, of course, difficult. It’s very tempting to want to prove that someone is wrong in the moment. I fall prey to this temptation frequently. The problem is that this approach just doesn’t work: people will not respond to the greatest arguments and evidence in the world if they feel attacked or cornered.

If you need more evidence of the effectiveness of the “ask the right questions” approach to changing people’s minds, consider this: A 2013 study found that people became far less confident in political policies they supported when prompted to explain how those policies would actually work. As it turns out, the vast majority of people do not thoroughly research and analyze political issues or policies in order to determine their positions. If they did, they would likely feel far less certain of their stances. Post-rationalist blogger David Chapman explained these findings in one of his essays:

“Contemporary ‘politics’ is mostly about polarized moral opinions. It is now considered normal, or even obligatory, for people to express vehement political opinions about issues they know nothing about, and which do not affect their life in any way. This is harmful and stupid.

‘Political Extremism Is Supported by an Illusion of Understanding’ (Fernbach et al., 2013) applies the Rozenblit method to political explanations. After subjects tried to explain how proposed political programs they supported would actually work, their confidence in them dropped. Subjects realized that their explanations were inadequate, and that they didn’t really understand the programs. This decreased their certainty that they would work. The subjects expressed more moderate opinions, and became less willing to make political donations in support of the programs, after discovering that they didn’t understand them as well as they had thought.

Fernbach et al. found that subjects’ opinions did not moderate when they were asked to explain why they supported their favored political programs. Other experiments have found this usually increases the extremeness of opinions, instead. Generating an explanation for why you support a program, rather than of how it would work, leads to retrieving or inventing justifications, which makes you more certain, not less. These political justifications usually rely on abstract values, appeals to authority, and general principles that require little specific knowledge of the issue. They are impossible to reality-test, and therefore easy to fool yourself with.

Extreme, ignorant political opinions are largely driven by eternalism. I find the Fernbach paper heartening, in showing that people can be shaken out of them. Arguing about politics almost never changes anyone’s mind; explaining, apparently, does.

This suggests a practice: when someone is ranting about a political proposal you disagree with, keep asking them ‘how would that part work?’ Rather than raising objections, see if you can draw them into developing an ever-more-detailed causal explanation. If they succeed, they might change your mind! If not—they might change their own.”

Other Ideas for Eroding Extreme Tribalism

A few other stray ideas related to the prospect of undermining/combatting extreme tribalism and the dogma that often leads to it:

Perpetually grey-pill yourself: We make a mistake if we assume that we ourselves are immune to dogma. Even if you consider yourself “open-minded,” you may still at some point be seduced into thinking you’ve arrived at the Final Answers about something. Thus, it’s imperative to forever challenge your own views, expose yourself to new ideas and information, and perpetually refine/expand your worldview. In an essay I consider essential reading, Venkatesh Rao referred to this process as “grey-pilling” oneself.

Targeted ad campaigns to undermine extremism: In a recent article titled ‘Google’s Clever Plan to Stop Aspiring ISIS Recruits,’ Andy Greenberg explained Google’s latest strategy for deterring extremism among those interested in joining ISIS: using targeted ad campaigns to direct these users to YouTube videos deemed effective in dissuading people from joining ISIS. This strategy has shown promise so far, which is heartening, given that ISIS may well be the most dangerous instantiation of extreme tribalism in the world today. It’s also unsettling to realize that Google is experimenting with using its technological power to change people’s views, as it’s easy to see how this same power could be applied to any number of less-than-benevolent ends.

Empower non-extremist members of political/religious groups to oppose extremist sub-groups: This idea came to me from Sam Harris, whose podcast contains a number of worthwhile discussions on the subject of combatting violent extremism. He discusses it at some length in an essay titled ‘What Hillary Clinton Should Say about Islam and the “War on Terror”.’ Essentially, he argues that “the first line of defense” against the “global jihadist insurgency” of ISIS will always be “members of the Muslim community who refuse to put up with it.” He writes, “We need to empower [peaceful Muslims] in every way we can. Only cooperation between Muslims and non-Muslims can solve these problems.”

The use of force: I am not a Gandhian pacifist, though I am by nature decidedly non-violent and believe that violence should be employed as a solution to problems only when all other courses of action fail. As such, I am wary of making this point and being misinterpreted. Nonetheless, I find it important to note that history suggests there may be times when the only workable solution for combatting a specific hyper-tribalistic threat is to resort to the use of force. This was arguably the case during WWII, when neither the Nazis nor Imperial Japan would likely have ceased in their genocidal attempts to dominate the world, if not for the Allies’ forceful intervention. It’s possible that the global jihadist insurgency best exemplified by ISIS is a similar sort of threat in our current world, though I do not feel I possess the expertise to make such a claim. If you’d like to think about this matter for yourself, I recommend listening to a couple of relevant episodes of Sam Harris’ podcast.

Conclusion: Extreme Tribalism as an Existential Threat

In sum, tribalism is rampant in (contemporary) politics, making it very difficult for most people to think/converse rationally about political matters. This phenomenon is what prompted LessWrong founder Eliezer Yudkowsky’s now-famous assertion that “politics is the mind-killer.” And this is why I do not affiliate with any political tribe and generally avoid membership in tribes that are not to some extent based on epistemic virtue.

In its most extreme forms, tribalism has historically resulted in (large-scale) violence. This fact alone suggests the importance of combatting extreme tribalism. However, in the 21st century, the risks posed by extreme tribalism are more pressing than ever before.

Given the weapon technologies human beings have now devised and are likely to devise in the not-too-distant future, extreme tribalism in groups with access to nuclear warheads, nanotech weapons, or bioweapons could bring about the extinction of the human species or all intelligent life on Earth.

This is not a joke or a flippant statement. Various global catastrophic risks pose a threat to our species in the coming century, and perhaps the largest among them is the threat of a terrorist group accessing cutting-edge weapon technology. Thus, eroding/counteracting extreme tribalism on a global scale is a matter of utmost importance as humanity progresses through the 21st century and beyond.

This erosion process begins with each of us. We as individuals must have the courage to acknowledge the tribes of which we are a part and inquire honestly as to whether these tribes promote hostility against outgroups, or demand that we hold certain beliefs or attitudes. We must constantly challenge, expand, and refine our own worldviews, seeking forever to avoid dogmatic, unchangeable beliefs.

In the foregoing, I have offered several ideas for how individuals and groups can counteract extreme tribalism. I hope you found my suggestions useful and thought-provoking, and I encourage you to comment with further ideas.

Here’s to building a more harmonious, cooperative, sustainable human enterprise.

Footnotes:

[1] The term “ideology” has multiple definitions and connotations, making it a somewhat confusing term to use. In this essay I’m using it in the sense which is nearly synonymous with “dogma”—i.e. a set of principles or beliefs laid down as incontrovertibly true. Ideologies purport to explain the world in its entirety and are not to be questioned by their adherents, yet they invariably omit and simplify the true complexity of reality.

Jordan Bates

Jordan Bates is a lover of God, father, leadership coach, heart healer, writer, artist, and long-time co-creator of HighExistence. — www.jordanbates.life
